- Sandboxed Runtime

- Classic Runtime

Within the sandboxed runtime, the Agent node gives the LLM the ability to execute commands autonomously: calling tools, running scripts, accessing the internal file system and external resources, and creating multimodal outputs.This comes with trade-offs: longer response times and higher token consumption. To handle simple tasks faster and more efficiently, you can disable these capabilities by turning off Agent Mode.

By default, you’d need to send all possible instructions to the model, describe the conditions, and let it decide which to follow—an approach that’s often unreliable.With Jinja2 templating, only the instructions matching the defined conditions are sent, ensuring predictable behavior and reducing token usage.Enable Memory to keep recent dialogues, so the LLM can answer follow-up questions coherently.A user message will be automatically added to pass the current user query and any uploaded files. This is because memory works by storing recent user-assistant exchanges. If the user query isn’t passed through a user message, there will be nothing to record on the user side.Window Size controls how many recent exchanges to retain. For example,

Choose a Model

Choose a model that best fits your task from your configured providers.After selection, you can adjust model parameters to control how it generates responses. Available parameters and presets vary by model.Write the Prompt

Instruct the model on how to process inputs and generate responses. Type/ to insert variables or resources in the file system, or @ to reference Dify tools.If you’re unsure where to start or want to refine existing prompts, try our AI-assisted prompt generator.

Specify Instructions and Messages

Define the system instruction and click Add Message to add user/assistant messages. They are all sent to the model in order in a single prompt.Think of it as chatting directly with the model:- System instructions set the rules for how the model should respond—its role, tone, and behavioral guidelines.

- User messages are what you send to the model—a question, request, or task for the model to work on.

- Assistant messages are the model’s responses.

Separate Inputs from Rules

Define the role and rules in the system instruction, then pass the actual task input in a user message. For example:Simulate Chat History

You might wonder: if assistant messages are the model’s responses, why would I add them manually?By adding alternating user and assistant messages, you create simulated chat history in the prompt. The model treats these as prior exchanges, which can help guide its behavior.Import Chat History from Upstream LLMs

Click Add Chat History to import chat history from an upstream Agent node. This lets the model know what happened upstream and continue from where that node left off.Chat history includes user, assistant, and . You can view it in an Agent node’scontext output variable.System instructions are not included, as they are node-specific.

- Without importing chat history, a downstream node only receives the upstream node’s final output, with no idea how it got there.

- With imported chat history, it sees the entire process: what the user asked, what tools were called, what results came back, and how the model reasoned through them.

Example 1: Process Files Generated by Upstream LLMs

Example 1: Process Files Generated by Upstream LLMs

Suppose two Agent nodes run in sequence: Agent A analyzes data and generates chart images, saving them to the sandbox’s output folder. Agent B creates a final report that includes these charts.If Agent B only receives Agent A’s final text output, it knows the analysis conclusions but doesn’t know what files were generated or where they’re stored.By importing Agent A’s chat history, Agent B sees the exact file paths from the tool messages and can access and embed the charts in its report.Here’s the complete message sequence Agent B sees after importing Agent A’s chat history:Agent B knows exactly which files exist and where they are, so it can embed them directly in the report.

Example 2: Output Artifacts to End Users

Example 2: Output Artifacts to End Users

Building on Example 1, suppose you want to deliver the generated PDF report to end users. Since artifacts cannot be directly exposed to end users, you need a third Agent node to extract the file.Agent C configuration:Agent C locates the file path from the imported chat history and outputs it as a file variable. You can then reference this variable in an Answer node or Output node to deliver the file to end users.

- Agent Mode: Disabled

- Structured Output: Enabled, with a file-type output variable

- Chat History: Import from Agent B

- User message: “Output the generated PDF.”

Create Dynamic Prompts Using Jinja2

Use Jinja2 templating to add conditionals, loops, and other logic to your prompts. For example, customize instructions depending on a variable’s value.Example: Conditional System Instruction by User Level

Example: Conditional System Instruction by User Level

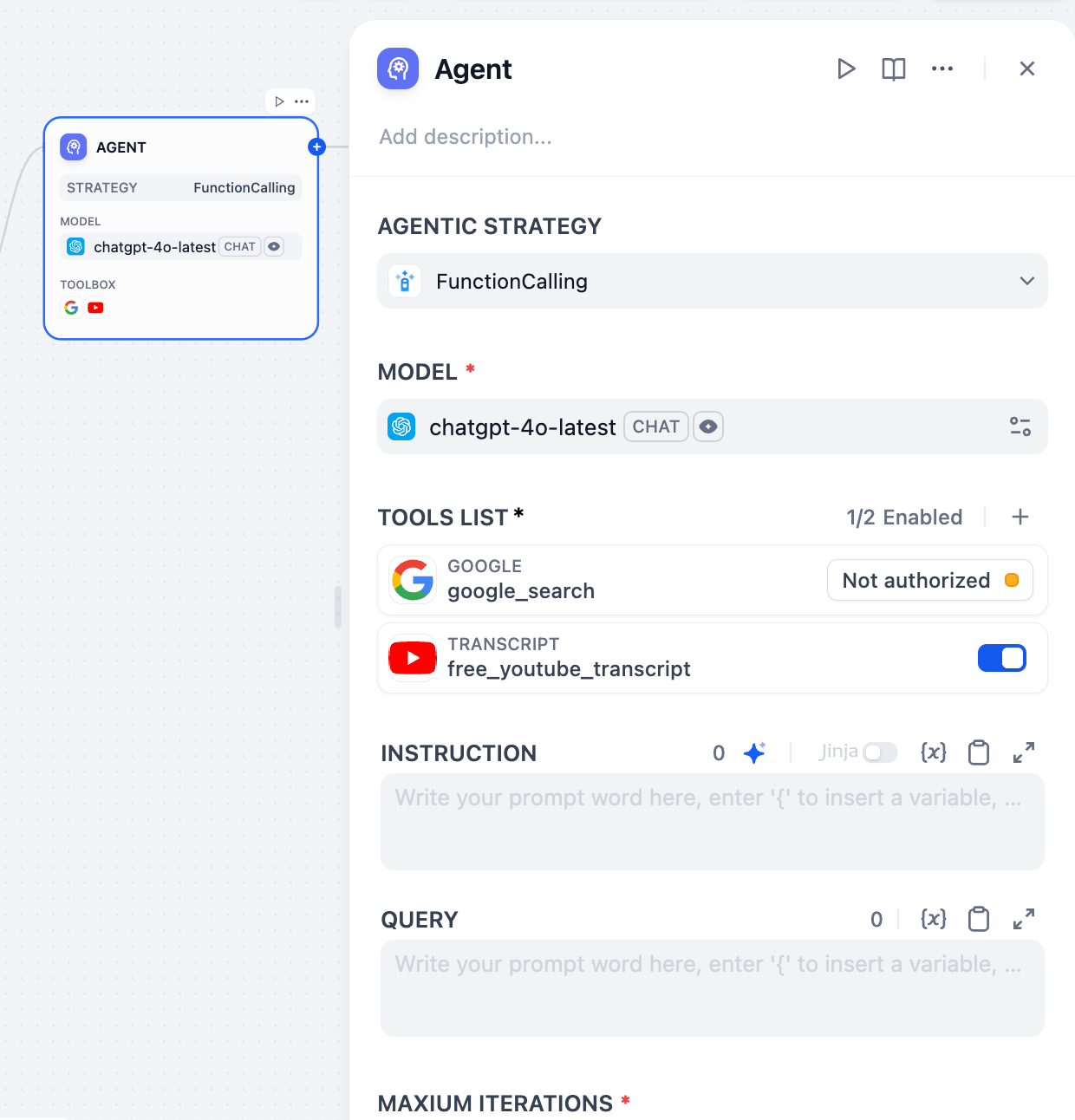

Enable Command Execution (Agent Mode)

Toggle on Agent Mode to let the model use the built-in bash tool to execute commands in the sandboxed runtime.This is the foundation for all advanced capabilities: when the model calls any other tools, performs file operations, runs scripts, or accesses external resources, it does so by calling the bash tool to execute the underlying commands.For quick, simple tasks that don’t require these capabilities, you can disable Agent Mode to get faster responses and lower token costs.Adjust Max IterationsMax Iterations in Advanced Settings limits how many times the model can repeat its reasoning-and-action cycle (think, call a tool, process the result) for a single request.Increase this value for complex, multi-step tasks that require multiple tool calls. Higher values increase latency and token costs.Enable Conversation Memory (Chatflows Only)

Memory is node-specific and doesn’t persist between different conversations.

5 keeps the last 5 user-query and LLM-response pairs.Add Context

In Advanced Settings > Context, provide the LLM with additional reference information to reduce hallucination and improve response accuracy.A typical pattern: pass retrieval results from a knowledge retrieval node for Retrieval-Augmented Generation (RAG).Process Multimodal Inputs

To let multimodal-capable models process images, audio, video, or documents, choose either approach:- Reference file variables directly in the prompt.

-

Enable Vision in Advanced Settings and select the file variable there.

Resolution controls the detail level for image processing only:

- High: Better accuracy for complex images but uses more tokens

- Low: Faster processing with fewer tokens for simple images

Separate Thinking and Tool Calling from Responses

To get a clean response without the model’s thinking process and tool calls, use thegenerations.content output variable.The generations variable itself includes all intermediate steps alongside the final response.Force Structured Output

Describing an output format in instructions can produce inconsistent results. For more reliable formatting, enable structured output to enforce a defined JSON schema.For models without native JSON support, Dify includes the schema in the prompt, but strict adherence is not guaranteed.

-

Next to Output Variables, toggle on Structured. A

structured_outputvariable will appear at the end of the output variable list. -

Click Configure to define the output schema using one of the following methods.

- Visual Editor: Define simple structures with a no-code interface. The corresponding JSON schema is generated automatically.

- JSON Schema: Directly write schemas for complex structures with nested objects, arrays, or validation rules.

- AI Generation: Describe needs in natural language and let AI generate the schema.

- JSON Import: Paste an existing JSON object to automatically generate the corresponding schema.

Handle Errors

Configure automatic retries for temporary issues (like network glitches), or a fallback error handling strategy to keep the workflow running if errors persist.